End-to-End Machine Learning Course - Machine Learning 101


In this tutorial, we will discuss the end-to-end machine learning pattern. We will learn about the overall workflow before diving into each component of machine learning and deep learning.

Subscribers: be sure to check uniqtech.substack.com regularly for content that is published but not yet sent to your inbox, so that we don't overload it.

Agenda

Introduction to Machine Learning

Machine Learning Roadmap

Machine Learning Landscape

Common Machine Learning Patterns

Welcome to the end-to-end machine learning course in your inbox. While we cannot guarantee you will be an ML expert at the end of the course (read our disclaimer here), we can certainly introduce you to some of the most important concepts in machine learning.

Sit back, relax and let’s get started.

Machine Learning vs Traditional Programming vs Deep Learning

In traditional programming, developers give the computer explicit instructions, either as procedural, top-down scripts or through control flow statements (conditionals, loops, function calls) that can “jump around” instead of running strictly top to bottom. Machine learning and deep learning instead supply well-known, proven algorithms with cleaned, feature-selected and/or feature-engineered data, along with the corresponding labels for that data (in unsupervised learning, only the data is supplied). The algorithm uses a loss function and an optimizer to update its parameters, such as weights and coefficients, while metrics track how well it is doing. Finally, these learned weights and coefficients are used together with the algorithm to make predictions.
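To make the loss-and-optimizer idea concrete, here is a minimal NumPy sketch. It assumes a tiny hypothetical dataset generated from y = 2x + 1, a mean squared error loss, and plain gradient descent as the optimizer (real libraries offer many fancier optimizers). Both parameters start at zero and are learned purely from the data.

```python
import numpy as np

# Hypothetical training data generated from y = 2x + 1 (features X, labels y).
X = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 * X + 1.0

w, b = 0.0, 0.0          # parameters the algorithm will learn
learning_rate = 0.05     # hyperparameter chosen by hand for this sketch

for _ in range(2000):
    y_pred = w * X + b                  # model prediction
    error = y_pred - y
    loss = np.mean(error ** 2)          # mean squared error loss
    grad_w = 2.0 * np.mean(error * X)   # derivative of the loss w.r.t. w
    grad_b = 2.0 * np.mean(error)       # derivative of the loss w.r.t. b
    w -= learning_rate * grad_w         # optimizer step: plain gradient descent
    b -= learning_rate * grad_b

print(w, b)  # approximately 2.0 and 1.0
```

Once training ends, prediction is just w * x_new + b with the learned values.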

Generally, the more high-quality data, the better.

The biggest difference: in traditional programming, developers write specific instructions; in machine learning and deep learning, algorithms learn parameters from data and a loss function rather than from hand-written rules.
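The contrast can be sketched in Python. The Celsius-to-Fahrenheit conversion below is a hypothetical example chosen for illustration: first the rule is written by hand, then scikit-learn's LinearRegression learns the same relationship from a handful of labeled examples.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Traditional programming: the developer writes the rule explicitly.
def celsius_to_fahrenheit(celsius):
    return celsius * 9.0 / 5.0 + 32.0

# Machine learning: we only supply examples, features plus labels.
celsius = np.array([[-40.0], [0.0], [37.0], [100.0]])  # features, shape (n_samples, 1)
fahrenheit = np.array([-40.0, 32.0, 98.6, 212.0])       # labels

model = LinearRegression()
model.fit(celsius, fahrenheit)  # learns a coefficient and an intercept from data

print(model.coef_[0], model.intercept_)  # approximately 1.8 and 32.0
print(model.predict([[25.0]]))           # approximately [77.]
```

Nobody told the model the 9/5 and 32 constants; it recovered them from the examples. With noisy real-world data, the learned values would only be approximate.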

Not having to write all the rules is a benefit, especially when the rules are hard to encode or program. The final product can also be more robust: it is less likely to break on small variations in the input than a strict rule-based program.

Deep learning uses neural networks with many layers, hence the word “deep.” A deep learning model usually consists of stacked weight-learning layers. Stacked together, these neural network layers act as universal function approximators: they can represent complex functions without those functions being explicitly coded.
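As a small illustration of stacked layers, the sketch below fits scikit-learn's MLPRegressor, a simple feed-forward neural network, to the sine function without ever coding the sine formula into the model. The layer sizes, activation, and solver are arbitrary choices for this example. Printing the shapes of coefs_ shows one learned weight matrix per connection between layers.

```python
import numpy as np
from sklearn.neural_network import MLPRegressor

# Training data: inputs and the sine values we want the network to approximate.
X = np.linspace(-3.0, 3.0, 200).reshape(-1, 1)
y = np.sin(X).ravel()

# Two stacked hidden layers of 32 units each (sizes chosen for illustration).
model = MLPRegressor(hidden_layer_sizes=(32, 32), activation="tanh",
                     solver="lbfgs", max_iter=5000, random_state=0)
model.fit(X, y)

print([w.shape for w in model.coefs_])  # [(1, 32), (32, 32), (32, 1)]
print(model.score(X, y))                # R^2 on the training data, close to 1.0
```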

Machine Learning Roadmap

Machine learning is an interdisciplinary field drawing on data science, data engineering, programming, statistics, analytics, data visualization, math, algorithms, and more. One challenge is that its vocabulary comes from all of these fields, which can cause confusion when different words are used interchangeably for the same idea.

Here are some common machine learning concepts:

Prerequisites:

Linear algebra, matrix math, matrix multiplication

NumPy (numeric computation)

Python programming

Data science development environment such as Anaconda, Jupyter Notebook, and Google Colab

Pandas (nice to have)

Algebra
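Matrix multiplication is the workhorse behind many models. A minimal NumPy sketch with made-up numbers: each row of X is one sample, w holds one weight per feature, and X @ w + b produces one prediction per sample. This is the core computation of a linear model, and of a single neural network layer before its activation function.

```python
import numpy as np

X = np.array([[1.0, 2.0],
              [3.0, 4.0],
              [5.0, 6.0]])   # 3 samples, 2 features each: shape (3, 2)
w = np.array([0.5, -1.0])    # one weight per feature: shape (2,)
b = 2.0                      # bias, broadcast to every sample

predictions = X @ w + b      # matrix-vector product, then add the bias
print(predictions)           # [ 0.5 -0.5 -1.5]
```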

Data Cleaning

Machine learning uses proven algorithms, architectures, and models. It has sharp “minds” made of CPUs, GPUs, and even TPUs; it is ready to learn, but it is missing data. The rise of machine learning and deep learning corresponds to the rise of massive data availability. “Garbage in, garbage out”: the quality and preparation of the input data will directly affect your model's results.

The ultimate goal is to turn data of any kind into numbers, such as tensors and NumPy arrays, that machine learning and deep learning libraries and frameworks can consume. This can involve encoding categorical data, scaling numeric data, applying principal component analysis (PCA) or other dimensionality reduction to datasets with many features, and cropping images so their dimensions match the inputs expected by existing proven models and architectures.
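A hedged scikit-learn sketch of turning mixed data into a numeric array, assuming a small hypothetical pandas DataFrame with one categorical and two numeric columns: OneHotEncoder encodes the category, StandardScaler scales the numbers, and PCA reduces the result to two components. Column names and values are invented for illustration, and PCA on four rows is purely a demonstration.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.decomposition import PCA
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical raw data: one categorical column, two numeric columns.
df = pd.DataFrame({
    "color": ["red", "green", "blue", "green"],
    "height_cm": [150.0, 160.0, 170.0, 180.0],
    "weight_kg": [50.0, 60.0, 65.0, 80.0],
})

preprocess = ColumnTransformer(
    [
        ("encode", OneHotEncoder(handle_unknown="ignore"), ["color"]),   # 3 one-hot columns
        ("scale", StandardScaler(), ["height_cm", "weight_kg"]),         # mean 0, std 1
    ],
    sparse_threshold=0,  # always return a dense NumPy array
)

pipeline = Pipeline([
    ("preprocess", preprocess),
    ("pca", PCA(n_components=2)),  # dimensionality reduction: 5 columns -> 2
])

X = pipeline.fit_transform(df)
print(X.shape)  # (4, 2)
```

Bundling the steps in a Pipeline means the same transformations, fitted on training data, can later be applied to new data with pipeline.transform.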

When the available dataset is small or the model is prone to overfitting, we can use data augmentation to generate more training data. An image of a cat, flipped horizontally, is still a cat. (Text flipped horizontally, however, may no longer make sense, unless mirror images are what we want.) Common augmentations include rotation, flipping, cropping, and random cropping. These variations in turn let the model see more and learn more, making it more robust to small variations in the data.
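Simple augmentations can be written directly with NumPy array operations. This sketch assumes images are stored as height x width x channels arrays; the helper function names are hypothetical, and deep learning frameworks ship their own ready-made augmentation utilities.

```python
import numpy as np

def horizontal_flip(image):
    """Mirror an H x W x C image left to right (a flipped cat is still a cat)."""
    return image[:, ::-1, :]

def rotate_90(image):
    """Rotate an H x W x C image by 90 degrees counterclockwise."""
    return np.rot90(image, k=1, axes=(0, 1))

def random_crop(image, crop_height, crop_width, rng):
    """Cut a randomly positioned crop_height x crop_width patch from the image."""
    height, width = image.shape[:2]
    top = rng.integers(0, height - crop_height + 1)   # upper bound is exclusive
    left = rng.integers(0, width - crop_width + 1)
    return image[top:top + crop_height, left:left + crop_width, :]

rng = np.random.default_rng(seed=0)
image = np.arange(4 * 6 * 3).reshape(4, 6, 3)  # stand-in for a tiny 4x6 RGB image

flipped = horizontal_flip(image)
rotated = rotate_90(image)
cropped = random_crop(image, 3, 4, rng)

print(flipped.shape, rotated.shape, cropped.shape)  # (4, 6, 3) (6, 4, 3) (3, 4, 3)
```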

Machine learning is often pragmatic: what works in practice gets adopted. Examples include taking the log of skewed data, scaling, and normalization. Math and empirical rules of thumb are often used simply to make training and prediction go more smoothly.
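For instance, a skewed feature (hypothetical income-like values here) can be log-transformed with np.log1p, then either min-max normalized to the range [0, 1] or standardized to mean 0 and standard deviation 1. A minimal NumPy sketch:

```python
import numpy as np

incomes = np.array([0.0, 9.0, 99.0, 999.0])  # hypothetical, heavily skewed feature

logged = np.log1p(incomes)  # log(1 + x): compresses large values and is safe at 0

# Min-max normalization: rescale to the range [0, 1].
min_max = (logged - logged.min()) / (logged.max() - logged.min())

# Standardization: shift to mean 0 and scale to standard deviation 1.
standardized = (logged - logged.mean()) / logged.std()

print(min_max)  # approximately [0, 0.333, 0.667, 1]
```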

Machine Learning Artifacts

Input data

Weights

Model architecture and parameters such as weights

Hyperparameters

State dictionary

Model artifacts can be hosted and used to make predictions in the cloud or on mobile.
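A minimal sketch of producing and reusing artifacts with scikit-learn, assuming the bundled Iris dataset: hyperparameters are set before training and readable via get_params(), learned weights live in coef_, and the fitted model is saved and reloaded with joblib, one of the options described in scikit-learn's model persistence documentation. (“State dictionary” is PyTorch terminology, model.state_dict(), and is not used here.) The file name is hypothetical.

```python
import tempfile
from pathlib import Path

import joblib
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)  # input data: features and labels

model = LogisticRegression(C=1.0, max_iter=1000)  # hyperparameters, set before training
model.fit(X, y)

print(model.get_params()["C"])  # a hyperparameter: 1.0
print(model.coef_.shape)        # learned weights: (3, 4) for 3 classes x 4 features

with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "iris_model.joblib"   # hypothetical artifact file name
    joblib.dump(model, path)                 # save the fitted model artifact
    restored = joblib.load(path)             # load it back, e.g. on a server
    same = (restored.predict(X) == model.predict(X)).all()

print(same)  # True
```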

Machine Learning Workflow

Data cleaning

Download a model architecture or write one from scratch

Train/test/validation split, so the model can be evaluated on data it has not seen during training

fit() the model with the training data

Optional but recommended: use validation data to choose the best model

Optional: use grid search with cross-validation and other cross-validation methods

Optional but recommended: use test data to make sure the model works on “unseen data”

Save the model parameters, e.g., the state dictionary

Optional: host the model and make a prediction endpoint available

Use the model for inference, aka predictions

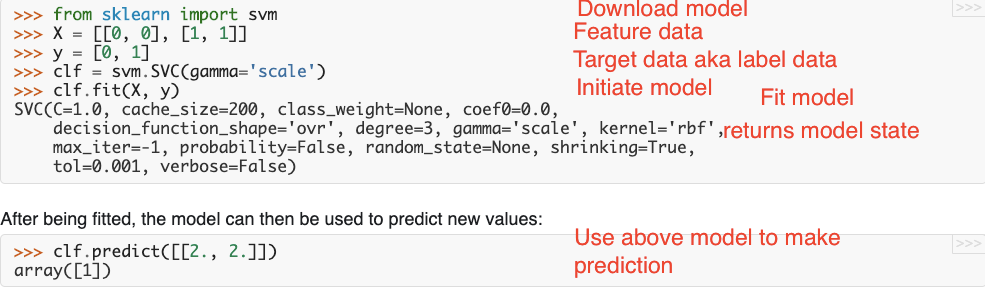

A concrete example of this workflow can be illustrated with a common scikit-learn code pattern. Scikit-learn is a popular library for data preprocessing and classical machine learning.
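A minimal sketch of such a pattern, assuming scikit-learn's bundled Iris dataset and a LogisticRegression classifier, both chosen for illustration: split the data, fit, check on validation data, do a final check on test data, then predict.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# 1. Load data: X holds the features, y holds the labels.
X, y = load_iris(return_X_y=True)

# 2. Hold out a test set, then split the rest into training and validation sets.
X_rest, X_test, y_rest, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42)
X_train, X_val, y_train, y_val = train_test_split(
    X_rest, y_rest, test_size=0.25, stratify=y_rest, random_state=42)

# 3. Import the model, store it in a variable, and fit it with training data.
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
model.fit(X_train, y_train)

# 4. Use validation accuracy to compare candidate models; check test accuracy once at the end.
val_accuracy = model.score(X_val, y_val)
test_accuracy = model.score(X_test, y_test)
print(val_accuracy, test_accuracy)

# 5. Inference: predict the class of a new flower's measurements.
print(model.predict([[5.1, 3.5, 1.4, 0.2]]))
```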


Remember: machine learning means letting lots of data flow through a proven model architecture, updating the model's parameters during training (when the machine, aka the algorithm, is actually learning!), and then using the optimized model for prediction.

Below we introduce some key concepts for the first time. Remember that the loss function and the optimizer are what the model uses to update its parameters, aka learn!

(The above looks like a lot of code, but it is actually quite easy. Throughout scikit-learn, aka sklearn, you just import the model, store it in a variable, fit it with data, and use it for prediction. That's all the code above does.)

Machine learning concepts

Input data: features vs labels

Data cleaning, representation, feature selection, feature engineering

Training, testing, validation datasets

Training

Loss function

Optimizer, gradient (the loss function and the optimizer are the two key pieces algorithms use to update weights, an important model parameter)

Cross validation

Prediction, inference

Caveats: generalization, preventing overfitting, regularization

Deep learning neural nets
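Cross validation and regularization often meet in hyperparameter tuning. A hedged sketch with scikit-learn's GridSearchCV, assuming the Iris dataset and an arbitrary grid of values for LogisticRegression's C parameter, the inverse of regularization strength (smaller C means stronger regularization):

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)

# Candidate regularization strengths (illustrative values).
param_grid = {"C": [0.01, 0.1, 1.0, 10.0]}

# 5-fold cross validation on the training data for every candidate C.
search = GridSearchCV(LogisticRegression(max_iter=1000), param_grid, cv=5)
search.fit(X_train, y_train)

print(search.best_params_)  # the C with the best mean cross-validated accuracy
print(search.best_score_)   # that mean cross-validated accuracy

# The best model is refit on all training data; check generalization on held-out data.
test_accuracy = search.score(X_test, y_test)
print(test_accuracy)
```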

Common Machine Learning Patterns

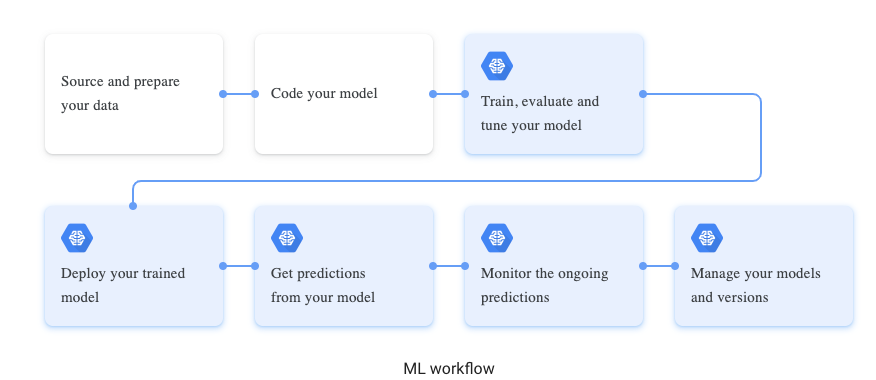

Machine learning workflow as described by Google.

Note that in reality this is rarely a linear process. Sourcing and preparing data, training, and evaluating are loops that take many iterations to perfect.

While we cannot possibly cover all of that in the newsletter, nor prepare you to be a full-time machine learning engineer, we can shed some light on how these components work intuitively. Stay tuned.

Why so many synonyms and akas? Even before it became hot through AI, self-driving cars, and deep learning, machine learning has been, and continues to be, a highly interdisciplinary field. Its vocabulary comes from statistics, economics, math, computer engineering, and data science.

Types of Machine Learning

Supervised Learning

Unsupervised Learning

Reinforcement Learning
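The practical difference between supervised and unsupervised learning shows up in what fit() receives. A minimal sketch with made-up 2D points: a supervised classifier (scikit-learn's KNeighborsClassifier, chosen for illustration) gets features and labels, while KMeans clustering gets features only and groups the points itself. Reinforcement learning, which learns from rewards through interaction, does not fit this pattern and is not shown.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.neighbors import KNeighborsClassifier

# Hypothetical 2D points forming two well-separated groups.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0],
              [10.0, 10.0], [10.0, 11.0], [11.0, 10.0]])
y = np.array([0, 0, 0, 1, 1, 1])  # labels: only available in supervised learning

# Supervised: fit() receives features AND labels.
classifier = KNeighborsClassifier(n_neighbors=3)
classifier.fit(X, y)
print(classifier.predict([[0.5, 0.5], [10.5, 10.5]]))  # [0 1]

# Unsupervised: fit() receives features only; KMeans discovers the groups.
clusterer = KMeans(n_clusters=2, n_init=10, random_state=0)
clusterer.fit(X)
print(clusterer.labels_)  # cluster ids are arbitrary; the first three points share one id
```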

Fields of Machine Learning

Deep Learning

Computer Vision in Deep Learning

Generative Adversarial Networks (GANs) in Deep Learning

Self Driving Car specialization of reinforcement learning

Post under construction. Check back on uniqtech.substack.com often for updates. Interested in reading some of our highly rated Medium articles for free? Email us at hi@uniqtech.co.